The rate of technological advancement in the US has been decelerating since around 1970, for more than half a century. This is likely the most alarming and important trend in modern American society. The resulting lack of growth shows up as a general pessimism about the future, declining standards of living, and antipathy toward the technology industry. But the root cause of this pernicious stagnation is rarely discussed: innovation has continued, but its impact on society has shrunk. Slower growth means people are fighting over an increasingly fixed pie, which creates more conflict. This, coupled with a rising debt-to-GDP ratio, does not seem like a formula for future prosperity. The solution is to build technology that has a significant impact on productivity. And lately, people have been turning toward AI as our potential savior.

The Half-Century Stasis

TFP, or total factor productivity, measures the ratio of aggregate output (GDP) to aggregate inputs such as labor and capital, and is commonly used to quantify the impact of technological innovation. American economist Robert Gordon estimates the average annual growth rate of TFP at 0.48% between 1890 and 1920, 1.89% between 1920 and 1970, and 0.65% between 1970 and 2014. The Bureau of Labor Statistics has more recently estimated average annual TFP growth for 2014-2022 at a sluggish ~0.5%. It’s sensible to be skeptical of any high-level economic metric, since such metrics are error-prone to measure. So it may be more helpful to do a limited analysis of some of the largest US industries and compare their technological advancements across the last two half-centuries to show the stark disparity.
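The sketch below makes the gap between those averages more concrete. It compounds each of Gordon’s average annual rates over the length of its period. It assumes, purely for illustration, that TFP grew at a constant rate every year within each period, which real data do not do. The helper name `cumulative_multiplier` is hypothetical.

```python
# Illustrative only: assumes each period's average annual TFP growth rate
# (Gordon's estimates, cited above) compounded steadily every year.

def cumulative_multiplier(annual_rate_pct: float, years: int) -> float:
    """Return the factor by which TFP grows at a constant annual rate."""
    return (1 + annual_rate_pct / 100) ** years


# (period label, average annual TFP growth in %, number of years)
PERIODS = [
    ("1890-1920", 0.48, 30),
    ("1920-1970", 1.89, 50),
    ("1970-2014", 0.65, 44),
]

if __name__ == "__main__":
    for label, rate, years in PERIODS:
        factor = cumulative_multiplier(rate, years)
        print(f"{label}: {rate:.2f}%/yr over {years} yrs -> x{factor:.2f}")
```

Run as a script, it prints multipliers of roughly 1.15, 2.55, and 1.33. Under these simplifying assumptions, TFP grows about 2.5x over 1920-1970 but only about a third over 1970-2014.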

Energy

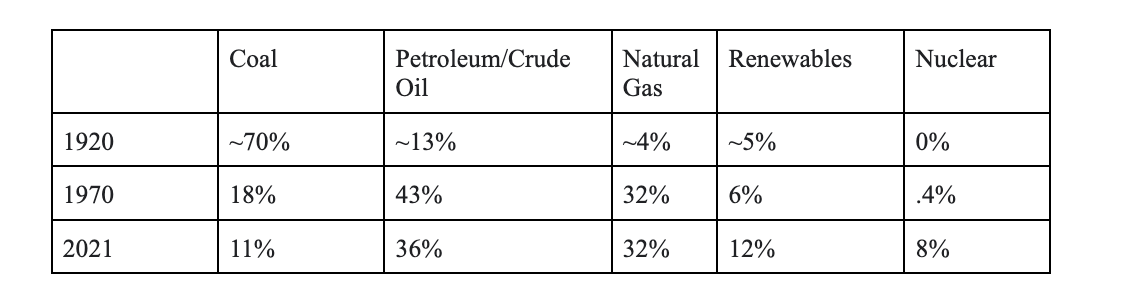

Between 1920 and 1970, the US ushered in the atomic era, unlocking the power of nuclear energy and nuclear weapons. US energy production also shifted radically away from coal toward petroleum and natural gas. Over the following half-century, from 1970 to 2020, the US did not power a significant portion of its economy with fission, and it remains far from understanding how to implement fusion at scale. Instead, we have incrementally scaled various forms of renewable energy and nuclear fission while building better energy storage systems. The US certainly has long-term net-zero emission goals but has been remarkably slow in advancing them.

Life Expectancy & Healthcare

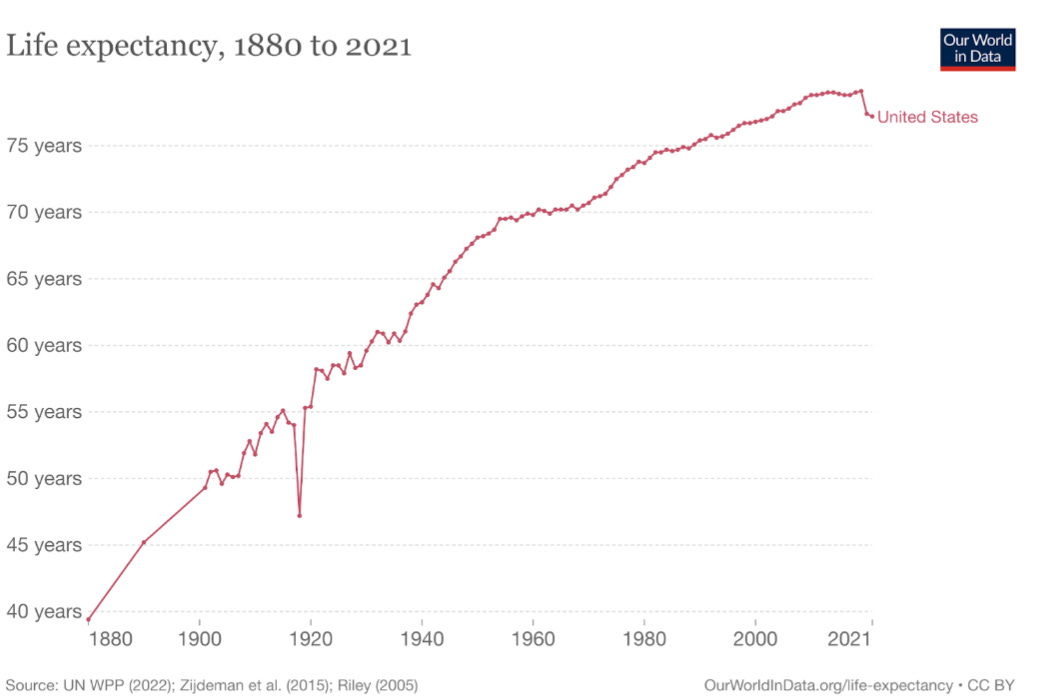

Growth in life expectancy seems to be a reliable high-level metric for analyzing healthcare and medical progress, and life expectancy growth in the US has plateaued over the last several decades. Much of the early gain came from decreasing infant mortality rather than from curing chronic diseases, but there were also many real, powerful public health and medical advancements. Between 1870 and 1920, the US introduced health standards that significantly lowered mortality rates: water was no longer contaminated, and food became safer and more nutritious. Between 1920 and 1970, the US developed antibiotics to combat infectious diseases, better diagnostic imaging, chemotherapy and radiation to treat cancer, and methods to treat cardiovascular disease.

Medical advancements have slowed since 1970, and cancer serves as a good case study. Nixon signed the National Cancer Act in 1971 to launch ‘The War on Cancer.’ Over half a century and $200B later, cancer is the second leading cause of death in the US. The American Cancer Society states that the 5-year relative survival rate for all cancers combined has increased from 49% for diagnoses during the mid-1970s to 68% for diagnoses during 2012-2018. This 19-percentage-point improvement (roughly 39% in relative terms) is a result of more frequent screenings and better treatments. But the US still relies primarily on the same invasive modes of treatment: surgery, chemotherapy, and radiation. We have developed novel targeted therapies, but they remain niche.
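The survival figures can be read two ways, which is why the difference between percentage points and percent matters here. The following is a minimal sketch of the arithmetic. It uses only the two American Cancer Society rates cited. The helper names are hypothetical.

```python
# Two ways to describe the same change in a rate.

def point_change(before_pct: float, after_pct: float) -> float:
    """Absolute change, in percentage points."""
    return after_pct - before_pct


def relative_change(before_pct: float, after_pct: float) -> float:
    """Change relative to the starting value, in percent."""
    return (after_pct - before_pct) / before_pct * 100


# 5-year relative survival, all cancers combined (American Cancer Society)
SURVIVAL_MID_1970S = 49.0
SURVIVAL_2012_2018 = 68.0

if __name__ == "__main__":
    pts = point_change(SURVIVAL_MID_1970S, SURVIVAL_2012_2018)
    rel = relative_change(SURVIVAL_MID_1970S, SURVIVAL_2012_2018)
    print(f"{pts:.0f} percentage points")
    print(f"{rel:.1f}% relative improvement")
```

Both numbers describe the same data. Reporting the 19-point gain as "19%" understates the relative improvement, which is closer to 39%.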

Chronic diseases like cancer and Alzheimer’s seem harder to cure, but curing them is not impossible. Yet neither the US healthcare system nor popular sentiment seems well positioned to tackle this goal. Healthcare is systematically misaligned in several ways, leading to rising costs for lower-quality care. A prevailing consumer sentiment today seems to be that 70-90 years is the ‘natural’ age to die, that there’s little use in investigating longevity, and that no one really wants to live much longer (even assuming a high quality of life). But not wanting to cure cancer seems a terrible and even anti-human idea.

Transportation

Between 1920 and 1970, the US moved beyond horses for travel, mass-produced automobiles, and introduced commercial air travel. Since 1970, commercial air travel hasn’t changed much technologically; it still takes about 6 hours to fly cross-country. Flights have gotten much safer through an incremental cycle of flying more miles, accumulating data from accidents, and fixing bugs. Prices have also fallen significantly, but mostly due to deregulation and intense competition. On the vehicle side, electric vehicles have gained market share as battery technology has improved and environmental concerns have grown, but fewer than 1% of the vehicles in the US are electric. Autonomous vehicles have recently been deployed commercially, but at a small scale and in limited settings.

Aerospace & Built World

Between 1920 and 1970, the US developed jet engines, supersonic flight, and guided missiles, and launched the Apollo missions to reach the Moon, creating the space industry. Since the last Apollo landing in 1972, we have yet to return to the Moon or send astronauts to any other planet. We have, however, developed drones and, remarkably, reusable rocketry.

The built world may be the most visibly depressing example of stagnation. Between 1920 and 1970, buildings gained plumbing, electricity, air conditioning and heating, telephones, and most kitchen appliances. Since 1970, they look about the same, aside from screens and changes in fashion.

Computers & Information Technology

Computers & IT is the industry that has seen the most growth since the 1970s. When we think of the innovation hub of the US, we think of Silicon Valley. When we think of technology, we think of computers and software; the concepts have become synonymous. Between 1920 and 1970, the US developed the TV, the transistor, integrated circuits, the first programmable electronic computers like ENIAC, mainframe computers, and time-sharing systems like Multics. The period also produced milestones like Engelbart’s demo, Unix, and high-level programming languages. The years from 1970 to 2022 saw continued, significant progress in computing, with the rise of several important technology paradigms: personal computing, the internet, mobile computing, cloud computing, and AI.

Potential Explanations

We need a good explanation for why technology has had less impact over the past half-century. It’s very difficult to establish causation in large, complex systems, and many factors have likely contributed. What follows is speculation about some potential explanations.

It’s Just the Nature of Technology

The most common explanation is also the most pessimistic: all the innovations before 1970 were the low-hanging fruit, and what remains are the most challenging problems that no one has solved. Electricity and antibiotics can only be invented once. On this view, stagnation was inevitable, and, conveniently, it absolves us all of fault: it doesn’t matter what we do, because this is the fundamental nature of things. I’m not particularly keen on this explanation, as too many other confounding variables must be eliminated before claiming we have no agency. Not building nuclear reactors was a choice.

British physicist David Deutsch argues that it’s difficult or impossible to know what will be significant until we understand a novel explanation and can look back with hindsight. He recounts past instances where scientists like Joseph-Louis Lagrange and Albert Michelson believed that the main laws governing the universe had all been discovered, the low-hanging fruit plucked, and all that was left to do was incremental work. They were soon proven wrong, and their failure was one of imagination.

Lagrange remarked that Newton was not only the greatest genius who ever lived but also the luckiest, for the system of the world can be discovered only once. Lagrange did not live to see that his own work, which he regarded as merely a translation of Newton’s, was a step toward replacing Newton’s system of the world. Michelson, for his part, claimed in 1894 that the most important fundamental laws had all been discovered and were so firmly established that the possibility of their ever being supplanted was exceedingly remote. Yet in 1887, Michelson had already observed that the measured speed of light does not change with the observer’s motion. This finding later became a core tenet of Einstein’s special theory of relativity, but Michelson didn’t realize its full implications.

Modern Peer Review & The Increase of Specialization

The modern peer review system may be flawed enough to slow scientific progress. Scientific journals have existed since the 17th century, but formal peer review, omnipresent in academic journals today, started with Nature in 1967. Other journals quickly followed, and it became the standard for the most prestigious publications. Peer review and science are almost synonymous today: can you trust science that hasn’t been ‘peer reviewed’? According to the Broad Institute, journals changed how they published scientific articles because science was becoming increasingly ‘professionalized’ and the body of knowledge was growing, creating a need for specialization. As fields become more specialized, the pool of people who can scrutinize the work also shrinks.

Being peer-reviewed can lend an article a false sense of truth. A Nature survey found that more than 70% of researchers have tried and failed to reproduce another scientist’s experiments, and more than 50% have failed to reproduce their own. The full extent of this reproducibility problem probably isn’t well known. A former editor-in-chief of the British Medical Journal published a critique of the peer review process, raising questions like ‘Who is the peer?’ and ‘What does it mean to be peer-reviewed?’ Peers could be competitors in the field, friendly colleagues, or non-experts. And are peers just confirming that the article is sensible, or are they doing the laborious work of digging through the details? Israeli economist Brezis and publishing executive Birukou have shown that changing reviewers leads to significantly different results for two reasons. First, reviewers display homophily in their taste in ideas: reviewers who develop conventional ideas (the majority) rate innovative ideas poorly, and vice versa. Second, reviewers vary in ability. Peer review risks devolving into something of a popularity contest in which the default is to reinforce conventional ideas.

Federal Government R&D Projects

Government spending on research and development may be the origin of much of the technological innovation later commercialized by private industry. The US government, or at least certain parts of it, used to be extremely competent at conducting large-scale, long-horizon projects like the Manhattan Project and the Apollo Program. Perhaps federal R&D spending has been drastically misallocated, limiting core innovation.

Richard Rowberg outlines the history of federal R&D funding in his CRS report. Roosevelt created the Office of Scientific Research and Development (OSRD) to manage R&D during World War II, and the director of OSRD, Vannevar Bush, provided Truman with a framework for government R&D in his 1945 report ‘Science, The Endless Frontier.’ Bush argued that basic research is the ‘pacemaker of technological progress’ and that it is best supported through public funding. The NSF defines basic research as acquiring new knowledge of underlying phenomena without a specific application in view, applied research as work directed toward a specific practical objective, and development as producing or improving products and processes.

Joseph Kennedy, the former Chief Economist for the Department of Commerce, draws on NSF data to show that federal R&D spending is dominated by development rather than basic or applied research. This is largely due to the DoD’s large R&D budget, which focuses on weapon systems. Even DARPA allocates only a small portion of its published budget to basic research. Outside of the DoD and NASA, however, the NIH, DOE, and NSF spend significant amounts on basic and applied research. The government’s core competencies seem to be collecting and redistributing money and managing the military-industrial complex, rather than figuring out the optimal way to allocate money to fuel scientific and engineering innovation. Beyond DoD spending, there is also likely a tendency to fund more conservative projects due to bureaucracy and, ironically, fear of wasting taxpayer money.

Increased Regulation & Risk Management

Regulation causes businesses to become more capital-intensive. This is largely why Computers & IT, one of the least regulated industries, was the main source of innovation over the last half-century. Meanwhile, the industries with the heaviest government involvement, like healthcare, education, and housing, tend to become exceedingly expensive and bloated with administrators. Regulation is necessary in many circumstances, but it comes at the cost of a slower rate of innovation and a tendency toward regulatory capture.

The increased focus on risk management also comes at the hidden price of funding more conservative R&D projects. But it’s very hard, in the early days, to predict future innovations originating from basic research—what fields will emerge as promising versus ending up as pseudoscience? Newton, who established classical mechanics, co-developed calculus, and made significant contributions to optics, also spent much of his life studying alchemy with goals like discovering the Philosopher’s Stone (to turn metals into gold) and the Elixir of Life. Was Newton superstitious, or was it just incredibly challenging to know which ideas would become most promising?

Orthodoxy & Luddite Fear of Technology

There seems to be a greater fear of technology today than in the past. People fear that AI will take their jobs, that their privacy will disappear, that nuclear weapons will destroy the world, and that bioweapons will proliferate. These are valid long-term concerns that deserve attention and careful thought. But this fear permeates people’s thinking and actions: why work on building technology if it will destroy the world? The fear of future doom manifests itself openly, but its more moderate versions are harder to see and Malthusian in nature: degrowth and incrementalism. Peter Thiel has spoken about this widely. Degrowth claims we must slow progress to save the environment because the world has finite resources. But it’s precisely because the world has finite resources that we need technology. Turning globalization up and technological progress down will exacerbate environmental problems. Incrementalism is the least pernicious in degree but probably the most widespread.

Deceleration in Fundamental Physics Research

Physics is the root catalyst of technology, so a slowdown in fundamental physics research is a potential factor to consider. This argument is very hard to make, as it requires judging the importance of new discoveries in physics, and it may be impossible to predict their future significance. It is nonetheless worth noting.

Can AI Save Us?

Technology is the solution, and the extent to which AI raises TFP this decade will depend on which of the potential outcomes below materializes. Progress in AI would have to be as impactful as the internet and personal computing combined to return TFP growth to the elevated ~1% annual average of 1994-2004. Today, many investors are underwriting the AI space on the basis that it will be as impactful as the internet this decade.
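One rough way to see what a return to ~1% annual TFP growth would mean is to compare two decades. The first grows at the ~0.5% rate the BLS reports for 2014-2022. The second grows at ~1%. This sketch assumes constant annual rates and looks only at the TFP level, not GDP. The helper `tfp_level` is hypothetical.

```python
# Compare a decade at the recent ~0.5% TFP growth rate against a decade
# at the ~1% rate of 1994-2004, assuming constant annual compounding.

def tfp_level(annual_rate_pct: float, years: int, start: float = 1.0) -> float:
    """TFP level after compounding a constant annual growth rate."""
    return start * (1 + annual_rate_pct / 100) ** years


YEARS = 10
BASELINE_RATE = 0.5  # ~2014-2022 average cited above (BLS)
REVIVED_RATE = 1.0   # ~1994-2004 average cited above

baseline = tfp_level(BASELINE_RATE, YEARS)
revived = tfp_level(REVIVED_RATE, YEARS)
# How much higher the TFP level ends up after a decade of faster growth
gap_pct = (revived / baseline - 1) * 100

if __name__ == "__main__":
    print(f"after {YEARS} years: baseline x{baseline:.3f}, revived x{revived:.3f}")
    print(f"gap: {gap_pct:.1f}% higher TFP level")
```

Under these assumptions, the faster path leaves TFP about 5% higher after ten years. The gap keeps widening for as long as the rate difference persists.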

AGI Scenarios - These scenarios are unlikely enough, and would bring such profound changes, that I won’t spend much time exploring their implications.

Superhuman Intelligence. This is extremely unlikely to occur this decade. Its significance would be akin to a much more advanced alien species making contact with humans and transferring all of its science and technology to us.

Human-level Intelligence. This is very unlikely to occur this decade but would result in an effectively unlimited supply of nearly free labor.

Non-AGI Scenarios - The division between these categories is blurry, but I think it’s helpful to differentiate between them.

Automation-level Intelligence. I’m defining this as the ability to perform many computational white-collar tasks at a human-like level without much instruction. This would entail automating most entry-level analytical work in software, accounting, engineering, consulting, and banking. This is possible this decade, but its likelihood is difficult to gauge. It could be as impactful as the internet and personal computing combined.

Augmentation-level Intelligence. This is the most likely of the four scenarios and what most investors are underwriting to some degree: AI embedded throughout all software, and an intelligent assistant for every person to help them throughout their personal and professional lives. This could be as impactful as the internet, but the uncertainty lies in how capable these assistants will become, that is, where they will fall on the spectrum between current capabilities and Automation-level capabilities.

The Limitations

Investors have seemed desperate to allocate capital to new sources of innovation for several years, which inevitably leads to technology bubbles. So it’s important not to give in to wishful thinking, especially while the currently bloated venture industry is increasingly impulsive.

Anecdotally, I’ve found LLMs highly valuable for certain commercial applications like augmenting coding, editing writing, and search. But chat-based LLMs tend to be less useful for retrieving specific pieces of information that would otherwise have taken me some time to find in reports through typical search. They index, synthesize, and cite sources, but the information they return for my queries often turns out to be incorrect. Their summaries of articles and reports can also be so general that they become vague and mediocre. These systems will almost certainly continue to improve and are remarkable in their ability to reason, but it’s unclear how good they’ll become and on what time horizon.

The massive TFP growth between 1920 and 1970 resulted from technological innovation across most commercial industries. For progress in a single industry like Computers & IT to revive overall growth to a similar degree, that progress would need to be massively impactful. LLMs can augment people across industries, but they’re confined to the digital world. Many real-world factors can impede their impact, from the physical (supply chains, manufacturing, and construction) to the regulatory (privacy, safety, and permitting) to the societal (corruption, unions, and a general unwillingness to change).

Some things humans are uniquely good at (at least until AGI):

Having Agency. Humans can direct and set long-term goals.

Creating Explanations for Phenomena. Humans are universal explainers, capable of creating knowledge and explaining phenomena.

Storytelling. Humans are purpose-built for telling and understanding complex stories.

The Opportunity

The promise of AI is alluring, and it’s potentially the most compelling frontier technology. It offers the hope that we’ll escape the recent stagnation, which the ZIRP (zero interest rate policy) environment further disguised. A promising sign is that it unnerves many people; real change usually does, because it’s scary. Driverless cars, industrial robotics, and general personal language assistants are all somewhere on the horizon, but that’s profoundly different from being here. The gap between a demo and large-scale business implementation could be decades.

Some of the more interesting and impactful applications include:

AI Personal Assistants: Every person could have their own personalized AI assistant to augment them in their personal and professional lives.

Omnipresent Personalization: AI should enable the hyper-personalization of content.

Autonomous Agents: AI can automate straightforward computational tasks.

Embedded Intelligence: As compute costs continue to decline, the cost of embedding intelligence everywhere decreases.

Software on Demand: The ability to create new applications on demand.

The Conundrum

If AI is going to be as impactful as the internet this decade, what are the early signs that we’re experiencing a significant increase in productivity? LLMs are scaling faster than past technology shifts, so the effects should be apparent earlier. Sectors most reliant on low-level language work could become an order of magnitude smaller as AI rewires and rewrites enterprise workflows, or, more likely, they could shift labor around and become more productive businesses. Historically, technology hasn’t created more unemployment but has changed the composition of jobs. Certain levers likely need to be unlocked to drive significant productivity gains, such as declining compute costs, very low hallucination rates, and integrations with existing data systems and workflows. It’s difficult to know where we are along these productivity curves, but at least today, things seem quite early. In any case, capital is flowing with the pious hope that AI helps pull us out of this chronic stagnation.