The Convergence

Humanizing Computer Interfaces through Language Models

There’s a bandwidth problem between humans and computers: every way we have of communicating with them involves significant friction. One of the earliest examples was the command line, used to give instructions to a computer and receive some cryptic message back. We then moved on to GUIs, which helped unlock massive adoption of the Web and serve as the mode for most computing today. Throughout the history of computing, there’s been a clear progression toward more intuitive interfaces.

Elon Musk founded Neuralink to solve this bandwidth issue through a brain-computer interface that relies on implanting a device in a person’s brain. This is a bit invasive. And we’re still far from communicating with computers using our neurons, aside from navigational tasks like what CTRL Labs has built. But for those who aren’t suffering from a debilitating condition, natural language seems a more promising way to communicate with computers, and it results in fewer holes in your skull.

The human brain has uniquely evolved over many years to understand and produce language. The singularity of the human brain, combined with the rapid advancement in NLP, paves the way for natural language to be the next frontier of human-computer interfaces.

Advances in Natural Language Processing

Transformer language models have catalyzed a shift in conversational AI technology over the last several years. A transformer is a deep learning model that uses the concept of ‘self-attention’, meaning it pays more attention to some parts of the input data than others. Transformers also process sequential input in a parallelized way, which allows for training on very large datasets.
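To make ‘self-attention’ a little more concrete, here is a minimal sketch in plain Python. It is a simplification under stated assumptions: the token vectors are made up, and the queries, keys, and values are just the raw inputs, whereas a real transformer first passes them through learned projections and runs many attention heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified self-attention: queries, keys, and values are all the raw
    input vectors (an assumption; real models use learned projections)."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this position against every position, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Each output is a weighted average of all the input vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # made-up 2-d token vectors
outputs, weights = self_attention(tokens)
```

Each row of `weights` shows how much one position draws on every other position. Here the first token leans more on the similar second token than on the dissimilar third one. Because each position’s output depends only on the inputs, not on the outputs of other positions, the loop could run for all positions at once, which is the parallelism mentioned above.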

These language models are trained on written human content to predict and generate text as well as to reason quantitatively. OpenAI’s GPT-3 (Generative Pre-trained Transformer) showed that large, pre-trained language models can achieve high performance with minimal task-specific training.

The simple ‘chatbot’ companies with basic functionality are now becoming more advanced ‘conversational AI’ ones. Their models have gotten accurate enough to pass the Turing Test, but only for some human evaluators. This probably speaks more to how easily people are tricked than to the models’ true intelligence.

Some significant recent developments include Google AI’s PaLM and Minerva as well as OpenAI’s Proof Solver, all detailed below.

Google AI’s PaLM

In April of 2022, Google AI published their Pathways Language Model (PaLM), a 540-billion-parameter model that achieves state-of-the-art few-shot performance across many types of tasks. PaLM is a dense, decoder-only transformer model. It’s trained with Google’s Pathways system across TPU pods. PaLM was evaluated on widely used NLP tasks and surpassed the performance of past state-of-the-art models on tasks ranging from Q&A to sentence completion, comprehension tasks, and common-sense reasoning.

Google AI’s Minerva

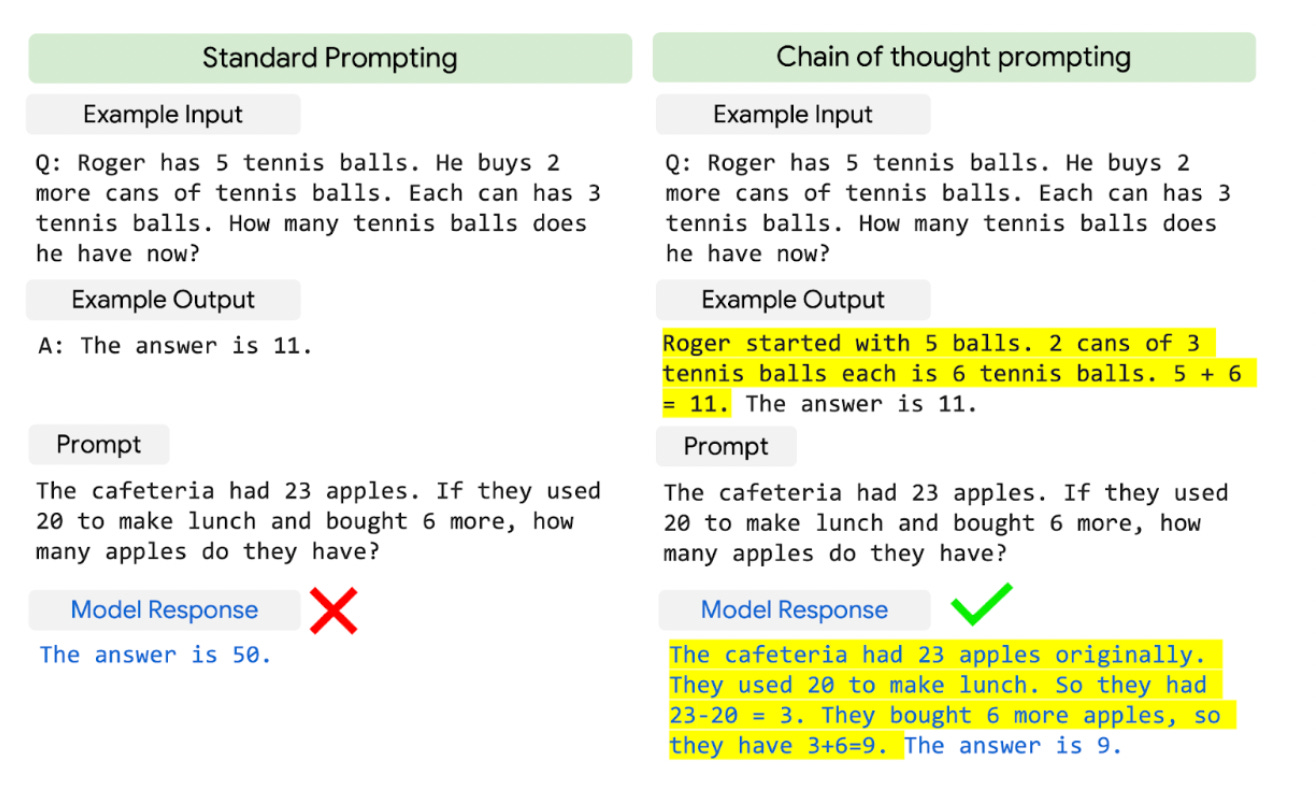

In June of 2022, Google AI published their Minerva model, which solves quantitative reasoning problems. The model is able to parse a question, recall relevant mathematical procedures, and generate a solution involving calculation and symbolic manipulation. Minerva uses few-shot prompting, chain of thought, and majority voting to achieve state-of-the-art performance. Minerva builds on the PaLM model with additional fine-tuning on a dataset of scientific papers. The model works by processing natural language and LaTeX expressions, but not the underlying mathematical structures.
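Majority voting is the simplest of those three ingredients to illustrate. The idea is to sample several independent solutions to the same problem and keep whichever final answer shows up most often. The sketch below is not Minerva’s code; the sampled answers are invented, and the hard part (generating the chains of thought) is assumed to have already happened.

```python
from collections import Counter

def majority_vote(final_answers):
    """Return the most common answer and the share of samples that agree."""
    counts = Counter(final_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(final_answers)

# Hypothetical final answers pulled from five sampled chains of thought.
samples = ["42", "42", "41", "42", "7"]
answer, agreement = majority_vote(samples)
```

Individual samples can go wrong in many different ways, but correct reasoning tends to land on the same answer, so the most frequent answer is often more reliable than any single sample.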

OpenAI’s Proof Solver

In February of 2022, OpenAI published a language model to find proofs for formal statements and Math Olympiad questions. OpenAI uses every incremental proof as new training data, which improves the model’s ability to iteratively find solutions to increasingly complex problems. The solver uses search procedures that take mathematical terms, and each procedure transforms the current statement into subproblems until it’s complete.

Ubiquitous Computing

Computation used to be confined to distinct areas of human life, first with mainframes in some rare work settings, then in the home with the PC revolution, and now on the smart devices in our pockets with the mobile revolution. The next step toward making computing ubiquitous is unlocking spoken language, or voice, as a medium.

Today, using voice commands on smartphones is still a novelty, useful for only a small subset of computing tasks. Only simple queries are tolerated, and even those are often misunderstood. Using voice commands is also disruptive in the presence of other people, and leveraging voice in a business setting is even rarer.

Being able to communicate with your phone in a conversational way, rather than giving explicit, awkward commands that require confirmation, allows for actual ubiquitous computing. This is seemingly part of the vision of many big tech companies with the launch of their home voice products, but friction remains high.

Optimizing voice technology is critical to make computing ever more available. And smartphones do seem like a natural extension to serve as the hardware layer since they’re omnipresent. As speech and language models improve and become productized, voice will become a powerful new computing interface for both the consumer and enterprise worlds.

The Role of Programming

Programming is a means of giving a computer instructions on what to do. It involves iteratively breaking down problems into subproblems and converting those subproblems into instructions that computers can understand. A programmer has to think of instructions in some high or low-level computer language, which can eventually be turned into 0s and 1s that the computer can make sense of. It’s much more intuitive, however, to just think of solving problems and giving computer instructions through natural language.

OpenAI’s Codex, a descendant of GPT-3 published in August 2021, is an AI system that converts natural language to code and powers GitHub’s Copilot product. Codex has demonstrated the ability to program computers by using language, which is less precise but more intuitive. If anyone could use language to code, then everyone could design, build, and test software systems. This is the no-code shift.

What’s the correct level of abstraction? At some sufficiently low level of granularity, it makes sense to just write the code directly. But this may just be a parochial view, and perhaps there is no limit to how ML models may optimize instructions at low levels of computation.

In February of 2022, DeepMind published AlphaCode, which uses language models to generate code that can solve competitive programming questions. The transformer model is pre-trained on open source GitHub repos and fine-tuned on competitive programming data. Remarkably, it has achieved a rank roughly on par with the median competitor in programming competitions.

Using natural language to create software is a compelling new way to interface with computers.

Human-Machine Alignment

Language models need to align with humans in order to serve as efficient and trusted computer interfaces. The alignment problem is defined as how to minimize the gap between what humans want a model to do and what the model actually does. An alignment issue we face today is that the ML models built into most discovery platforms optimize for usage, which is often not what the user actually wants.

OpenAI’s GPT-3 is trained to predict the next word given past words, not to directly solve the language task the user wants. To remedy this, OpenAI fine-tunes GPT-3 through reinforcement learning from human feedback to improve alignment.
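A toy example shows why ‘predict the next word’ and ‘do what the user wants’ are different objectives. The sketch below swaps in a much simpler technique than GPT-3 uses: it just counts which word most often follows each word in a made-up training sentence, where GPT-3 runs a transformer over long contexts. The objective is the same in spirit, though: continue the text plausibly.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

corpus = "the cat sat on the mat and the cat slept"  # made-up training text
model = train_bigram(corpus)
prediction = predict_next(model, "the")
```

Nothing in this objective encodes what the person typing actually needs. The model only learns what usually comes next, which is the gap that fine-tuning on human feedback is meant to narrow.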

Model Explainability

It’s not entirely clear what having large language models as the primary computing interface will entail. Tasks will become more intuitive and more efficient, but the abstraction goes so deep that explainability will be key. Individuals will need to have a deep trust in whatever language models they’re using to safely manage significant portions of their personal and professional work. Adding a neural network blackbox layer between humans and all the data they’re querying will require robust transparency.

Google Search

Today, ML models are built into certain applications largely for discovery and recommendations. One significant example where the primary interface of a product is expressed through language models is Google Search, one of the most financially successful technology products of all time. This makes sense given that search is a highly language-based product.

The primary way to parse the web is ML-driven, as Search utilizes language models for its user interface. Google evaluates search queries by semantically understanding user queries and ranking information sources. Simple queries can result in a direct answer, but long and complex ones often lack a declarative response.

When initially launched, Search just matched text between a query and a page. Today, every search passes through some combination of hundreds of specialized ML models. There are four major ML systems that Google currently uses: RankBrain, Neural Matching, BERT, and MUM.

RankBrain was launched in 2015 and was the first deep learning system used in search, enabling semantic understanding between words in a search and concepts. Neural Matching was introduced in 2018 to help better understand how queries relate to webpages and unlocked matching of broader representations of concepts.

Google then launched BERT (Bidirectional Encoder Representations from Transformers) in 2019. BERT allowed Search to understand how combinations of words lead to different meanings. Search could now understand whole sequences of words and their relationships. The last major milestone came in May of 2021, when Google launched MUM (Multitask Unified Model), which can understand and generate language across many languages and tasks. MUM is also, uniquely, multimodal and can process both text and images.

Google’s Search is not highly explainable. Putting aside ads, it’s not clear to the user how the rankings work, nor are they necessarily trusted. Users know they’re sometimes not getting the best results for their queries, and certain information sources are hidden. Search products are also becoming more verticalized across different platforms, whether that’s Amazon for commerce, Reddit for communities, or TikTok for videos.

Going Superhuman

Communicating with a computer shouldn’t be just as low-friction as communicating with a person—it should be even easier. Different languages and accents frequently disrupt person-to-person communication across the world. Language models can fix these disruptions by better understanding speech and intent.

Models are trained to understand the semantic meaning behind queries, which people themselves are often unable to parse. As humans, we naturally disguise what we say, purposefully to maneuver around a point or to appear polite, and accidentally when we simply can’t communicate clearly. Computers are increasingly better at understanding many nuances of human language like emotion and tone. “What does this person actually want” is a difficult question to answer, and it relates back to the alignment issue.

Anthropomorphism

Language models have the potential to serve as a new dominant type of human-computer interface. Consumers have already experienced ML models that permeate many of the product experiences they encounter today. These language models are not actually moving towards true human-like understanding, but are certainly appearing more human-like in ability.

Technology continues to evolve to remove friction, and user interfaces are no exception. The human-machine convergence will happen one way or another—either with humans thinking more machine-like or computers ‘thinking’ more human-like. My bet’s on the latter.